Top scientist: Big tech distracting attention from AI’s existential threat

Big tech companies have effectively diverted global attention from the existential threat that AI continues to pose to humanity, a prominent artificial intelligence scientist says.

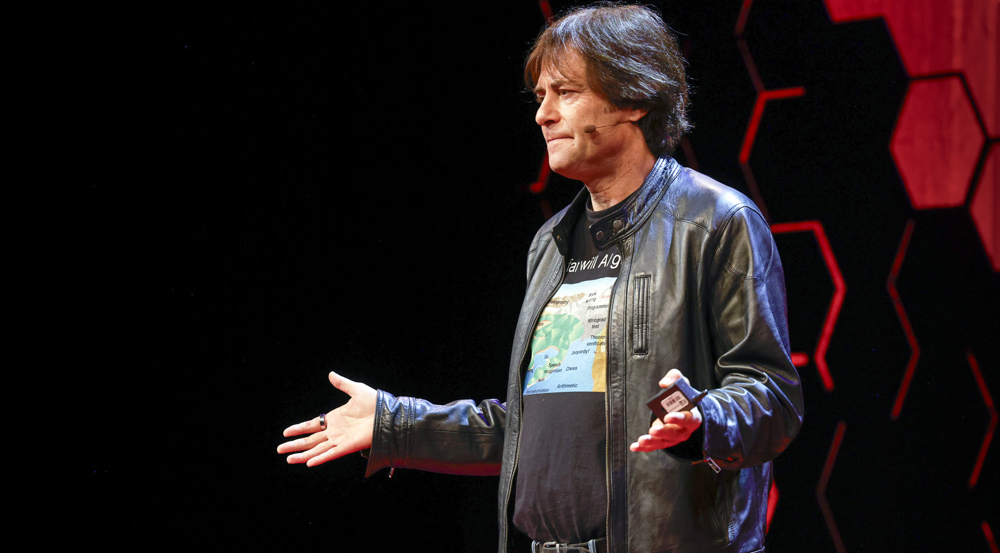

Max Tegmark told the AI Summit in Seoul that shifting the focus from the extinction of life to a broader conception of artificial intelligence safety risked an unacceptable delay in imposing strict regulation on the creators of the most powerful programs.

Tegmark, who trained as a physicist, recalled how, in 1942, Enrico Fermi built the first reactor to achieve a self-sustaining nuclear chain reaction, beneath a football field in Chicago.

“When the top physicists at the time found out about that, they really freaked out, because they realized that the single biggest hurdle remaining to building a nuclear bomb had just been overcome. They realized that it was just a few years away – and in fact, it was three years, with the Trinity test in 1945.”

In Seoul, only one of the three "high-level" groups addressed safety directly, and it examined a wide range of risks, "from privacy breaches to job market disruptions and potential catastrophic outcomes".

Tegmark contends that downplaying the most serious risks is neither beneficial nor coincidental.

“That’s exactly what I predicted would happen from industry lobbying,” he said.

"In 1955, the first journal articles came out saying smoking causes lung cancer, and you'd think that pretty quickly there would be some regulation. But no, it took until 1980, because there was this huge push by industry to distract. I feel that's what's happening now."

Columbia president quits amid Trump’s crackdown on pro-Palestinian activism

Academy faces backlash for failing to support Oscar-winning Palestinian filmmaker

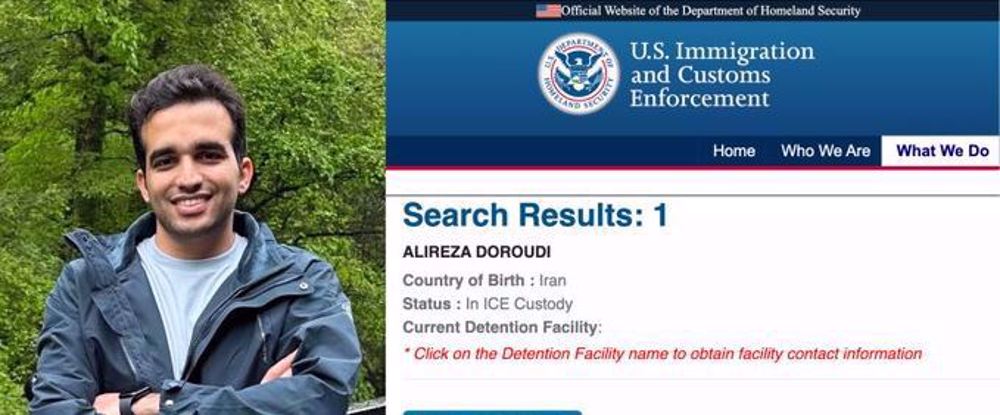

US agents conduct 'off campus' arrest of Iranian PhD student

VIDEO | UK economy reels from impact of Trump Tariffs

UNRWA chief slams Israel’s attacks on UN facilities

VIDEO | The story of Heyam: nowhere is like Gaza!

Israeli warplanes carry out more airstrikes near Damascus

VIDEO | 'War Criminal' welcomed

Denmark's PM visits Greenland after Trump threat to seize it

Pezeshkian: If Muslim nations unite, enemies cannot oppress them

Hundreds of thousands of Gazans flee as Israel ‘wipes out’ Rafah

This makes it easy to access the Press TV website