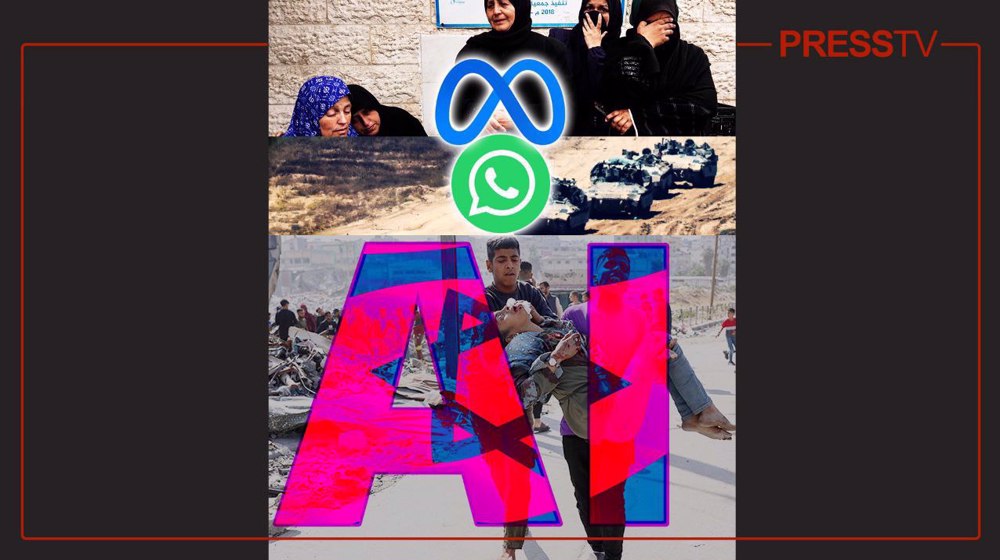

Factbox: Is Meta facilitating Israel’s AI-aided bombing of Palestinians in Gaza?

By Maryam Qarehgozlou

A distant rumbling growing louder warns of a menacing airstrike, and suddenly the sky tears open with the deafening roar of engines and the piercing whistle of falling bombs.

Explosions send shockwaves through the air, shattering windows and tearing apart walls.

Debris is raining down and the air is thick with smoke. Survivors emerge from the rubble, dazed and bloodied, searching frantically for loved ones amid the relentless cacophony of death and destruction.

This is the grim and gory reality unfolding across Gaza due to Israel’s genocidal war on the besieged territory which has killed more than 34,500 Palestinians, two-thirds of whom are women and children.

According to recent reports, Israel has been indiscriminately targeting Palestinians in the narrow strip with artificial intelligence (AI)-based programs such as “Lavender,” “Gospel,” and “Where’s Daddy,” drawing on information partly provided by Meta’s messaging app WhatsApp.

The revelation has again exposed deep linkages between the apartheid regime and social media giants, in this case Meta, the California-based company formerly known as Facebook.

How do Israeli AI killing machines work?

Lavender is a “target machine” built on AI and machine-learning algorithms, according to a recent investigation by +972 Magazine and Local Call. It is trained on the characteristics of prominent Hamas and Islamic Jihad resistance fighters, and it then searches the general population for people who share those same characteristics, known as “features.”

Features such as being in a WhatsApp group with a known resistance fighter, changing cell phones every few months, and changing addresses frequently can increase an individual’s rating, the report published earlier this month stated.

The Lavender machine joins another AI system known as Gospel. While Lavender marks people and puts them on a kill list based on its training dataset, Gospel marks buildings and structures that the Israeli military claims resistance fighters, including low-ranking ones, operate from.

A third system, “Where’s Daddy,” tracks targets and signals to the Israeli military when suspects enter their family homes.

Six unnamed sources who served in the Israeli military during the current war on the Gaza Strip told +972 Magazine and Local Call that Lavender has played a “central role” in the unprecedented bombing of Palestinians, especially during the onset of the current war.

According to these sources, human personnel often served only as a “rubber stamp” for the machine’s decisions, even though Lavender makes “errors” in approximately 10 percent of cases by marking individuals who have only a loose connection to resistance groups, or no connection at all.

The outputs provided by the machine were treated “as if it were a human decision,” they said, adding that it took them only about “20 seconds” before authorizing a bombing, just enough time to make sure the Lavender-marked target was male.

Moreover, the report revealed that, from what the sources called an “intelligence standpoint,” the military preferred to locate the individuals in their private houses. It used automated systems such as “Where’s Daddy” to track the targeted individuals and carry out bombings in their family residences.

“The IDF [Israeli military] bombed them in homes without hesitation, as a first option. It’s much easier to bomb a family’s home. The system is built to look for them in these situations,” one of the sources said.

Other sources cited in the report also said that in some cases, after the Israeli military bombed a family’s private home, it turned out that the intended target of the assassination was not even inside the house, because no further verification was conducted in real time.

It is because of the AI program’s erroneous decisions that thousands of Palestinians, most of them women and children or people who were not involved in the fighting, were wiped out by Israeli airstrikes, especially during the first weeks of the war that started on October 7, 2023.

According to the figures shared by the United Nations on November 20, 2023, 45 days into the war, more than half of the fatalities — 6,120 people — belonged to 825 families, many of whom were completely wiped out while inside their homes.

Targeting with unguided ‘dumb’ bombs

The report also highlighted that the Israeli military used unguided munitions, commonly known as “dumb” bombs (in contrast to “smart” precision bombs), to target junior resistance fighters.

These bombs are known to cause more collateral damage than guided bombs.

“You don’t want to waste expensive bombs on unimportant people — it’s very expensive for the country and there’s a shortage [of those bombs],” one of the sources cited in the report stated.

Two of the sources said that for every “suspected” junior Hamas resistance fighter that Lavender marked, the Israeli military permitted personnel to kill up to 15 or 20 civilians, which the sources said was unprecedented.

“In the past, the military did not authorize any ‘collateral damage’ during assassinations of low-ranking militants,” they said.

However, in the case of senior Hamas officials, the Israeli military on several occasions authorized the killing of more than 100 civilians, they added.

Ata Mirqara, a veteran journalist, told the Press TV Website that the dehumanizing language spilling out of Israel has helped to create a climate in which such horrendous crimes can take place.

“While some might find it shocking or unprecedented, treating Palestinians as numbers and dehumanizing them is Israel’s special modus operandi to neutralize public outrage at what amounts to the worst ethnic cleansing in contemporary history,” said Mirqara.

Talk of fighting “human animals,” making Gaza a “slaughterhouse,” and “erasing the Gaza Strip from the face of the earth” is typical of the inflammatory rhetoric that has been a key component of Israeli leaders’ language about Palestinians in Gaza since the war began. This rhetoric is proof of Israel’s intent to commit genocide in the Strip, Mirqara added.

Is Meta complicit in Israel’s AI-assisted genocide?

Earlier this month, Paul Biggar, a New York-based software engineer and blogger, brought into the spotlight a “little-discussed detail” in the +972 Magazine and Local Call article about Lavender. He pointed out that the system may be killing Palestinians based on data that Meta’s WhatsApp is probably sharing with it.

According to the +972 article, the Israeli AI system’s inputs can include being in a WhatsApp group with a suspected member of Hamas.

“There are many possible scenarios, including employees colluding with Israel, known or unknown security vulnerabilities, and failures of the WhatsApp product to prioritize protecting its users against this in various ways,” Biggar told the Press TV Website in an email.

However, Biggar, the founder of Tech for Palestine, stated that it is not clear whether Meta is “directly facilitating” the “genocide” in Gaza.

Meta did not immediately respond to a request for comment from the Press TV Website.

Earlier, responding to the recent speculation, a Meta spokesperson told Middle East Monitor that the tech giant had no information indicating these reports were accurate.

“WhatsApp has no backdoors and we do not provide bulk information to any government. For over a decade, Meta has provided consistent transparency reports and those include the limited circumstances when WhatsApp information has been requested,” the spokesperson said.

The spokesperson added that Meta’s principles are “firm” and it carefully “reviews, validates and responds to law enforcement requests based on applicable law and consistent with internationally recognized standards, including human rights.”

Biggar, however, told the Press TV Website that Meta’s statement says little, if anything, about the severity of the situation and implies the company does not intend to do anything about it.

“Meta’s answer fundamentally does not address whether their users were harmed and continue to be harmed, and their lack of response on this is shocking considering the allegations. This is a deflection, and the summary is that they plan to do nothing at all, not even investigate the claims, a complete dereliction of duty to their users,” he warned.

According to Biggar, if Meta is providing Israel with users’ data, it could pose a threat to the lives of people in Gaza.

“This doesn’t just threaten the security and privacy of users in Gaza, it threatens their lives, and it threatens the lives of any WhatsApp users in a similar oppressive regime, which includes most of the world.”

Why might Meta be aiding the Gaza genocide?

There are precedents for Meta’s pro-Israel bias. Earlier in the war, rights groups revealed that Meta had engaged in “systemic and global” censorship of pro-Palestinian content since the conflict began.

Moreover, in February, Meta announced it was “revisiting” its hate speech policy around the term “Zionist,” which also sparked concern about further censorship of pro-Palestinian content.

In 2017, Sheryl Sandberg, then chief operating officer (COO) of Facebook (now Meta) and currently a Meta board member, said that the metadata provided by WhatsApp has the potential to inform governments about possible terrorist activity.

Biggar told the Press TV Website that the leadership of Meta themselves is “extremely sectarian” and pro-Israel.

“They have a major center in Tel Aviv and hundreds of Israeli employees and dozens of former Unit 8200 employees,” he said, mentioning the infamous Israeli military intelligence department that surveils Palestinian electronic communication.

According to Biggar, three of Meta’s most senior leaders have close ties to Israel. They include Chief Information Security Officer (CISO) Guy Rosen, who served in the Israeli military’s Unit 8200 and is one of the most powerful people in the company when it comes to content policy.

Mark Zuckerberg, Meta’s founder and CEO, and Sandberg have also been actively pushing false Israeli propaganda, including the discredited “Hamas Oct. 7 mass rape” hoax, he added.

Biggar also said Zuckerberg donated $125,000 to Zaka, an ultra-Orthodox search-and-rescue organization that created, and continues to spread, much of the false atrocity propaganda peddled against Hamas.

“This allyship with Israel from the most senior parts of Meta’s governance (CISO, CEO, and board member) sheds light on why Israel’s military is able to get this information from WhatsApp, a supposedly ‘private’ app,” Biggar said.