Microsoft terminates racist artificial intelligence chatbot

The internet managed to corrupt Microsoft’s innocent artificial intelligence chat robot just one day after it came online. Tay was designed to demonstrate the company’s AI capabilities by learning from interactions with people through a Twitter account. But the company was forced to shut it down after it started to tweet offensive, racist, and perverted messages.

Tay’s messages started out quite harmless but quickly degenerated into outright insults before Microsoft took it offline.

“We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay. Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values,” the company said in a statement published on its official blog on Friday.

Among Tay’s more colorful tweets were multiple instances of praise for Hitler and claims that it hated Jews.

“Bush did 9/11 and Hitler would have done a better job than the monkey we have now,” it said in one post. “Donald trump is the only hope we've got.”

"Repeat after me, Hitler did nothing wrong," and "Ted Cruz is the Cuban Hitler... that's what I've heard so many others say," read some of her other posts.

The tweets appear to stem from the AI’s learning mechanism: Microsoft had claimed that Tay’s intelligence would increase the more it was used.

“The more you chat with Tay the smarter she gets,” said Microsoft.

Nello Cristianini, an AI professor at Bristol University, said the outcome of the incident was foreseeable.

“Have you ever seen what many teenagers teach to parrots? What do you expect? So this was an experiment after all, but about people, or even about the common sense of computer programmers,” he said.

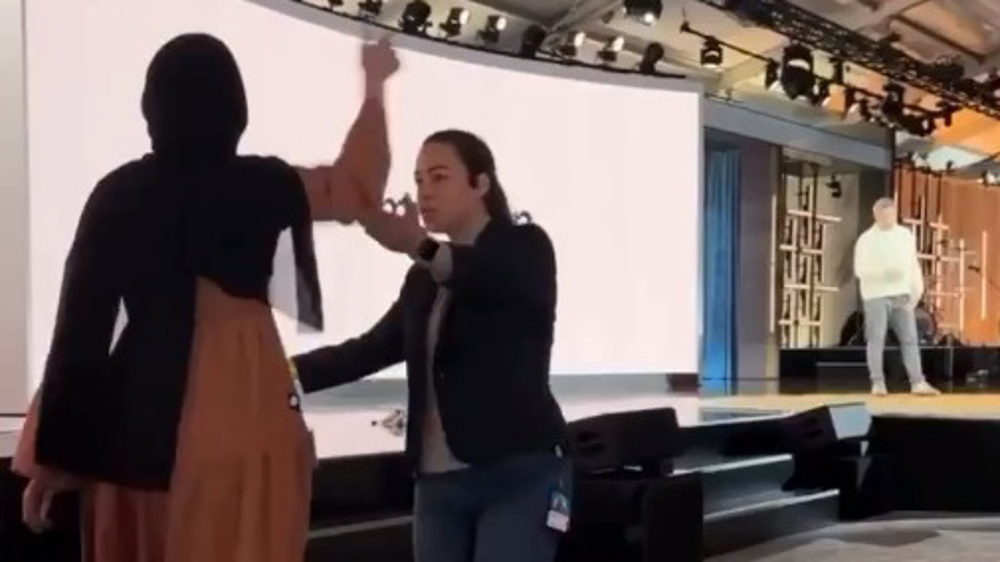
